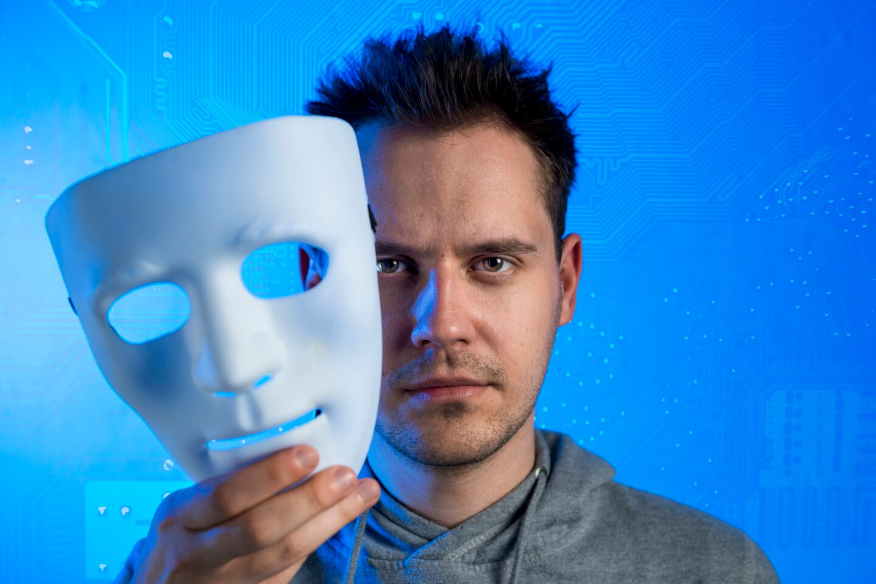

Understanding how deepfakes and synthetic media erode trust at scale helps explain why their impact extends far beyond individual fake videos.

Deepfakes and synthetic media are not just new tools for deception; they are catalysts for a broader breakdown in trust. As audio, video, and images become easier to fabricate convincingly, the assumption that seeing or hearing something makes it real no longer holds. This shift affects journalism, politics, personal relationships, and legal systems simultaneously.

What Makes Deepfakes Different From Past Misinformation

Misinformation has always existed, but deepfakes change how credible it can look. A text-based falsehood asks the audience to take a claim on faith. Synthetic media appears to supply the evidence itself.

When a video appears to show a person speaking or acting, it can bypass skepticism. Visual and auditory cues trigger an instinctive trust that written claims do not.

This realism raises the cost of verification and lowers the barrier to manipulation.

Explore How Disinformation Campaigns Spread Across Countries for cross-border manipulation insights.

How Synthetic Media Scales So Quickly

Advances in AI models allow rapid generation of convincing media with minimal technical skill. What once required specialized teams can now be done by individuals using widely available tools.

Distribution platforms amplify reach. A single piece of synthetic media can be replicated, altered, and shared across networks in minutes.

Scale turns isolated deception into systemic risk.

The Erosion of “Proof” in Public Discourse

Deepfakes undermine the concept of proof itself. When real footage can be dismissed as fake and fake footage can appear real, evidence loses clarity.

This ambiguity benefits bad actors. Plausible deniability becomes a weapon: anyone caught on authentic footage can claim it was fabricated. Truth ends up competing with doubt rather than with a specific falsehood.

Public debate shifts from “what happened” to “can we trust anything at all.”

Political and Social Consequences

In politics, synthetic media threatens elections and diplomacy. Fake statements attributed to leaders can inflame tensions or influence voters before verification catches up.

Socially, trust between individuals erodes. Audio scams impersonating loved ones exploit emotional vulnerability. Reputations can be damaged instantly.

These harms scale faster than traditional remedies can address.

Read The Global Election Watch: What International Observers Actually Do for election integrity context.

Why Detection Alone Isn’t Enough

Detection tools keep improving, but they face inherent limits. As generation methods evolve, detection becomes a moving target.

Relying solely on detection creates a perpetual arms race. Even accurate tools cannot restore trust once doubt spreads.
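One way to see the limits of detection at scale is simple base-rate arithmetic. The sketch below uses purely hypothetical numbers (1 in 1,000 uploads is fake, and a detector that is right 99% of the time on both fake and real media); none of these figures come from a real tool or platform. Under those assumptions, most flagged items would still be genuine, which gives people another reason to doubt the flags themselves.

```python
def flagged_precision(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Share of flagged items that are actually fake, via Bayes' rule."""
    true_positives = prevalence * sensitivity
    false_positives = (1 - prevalence) * (1 - specificity)
    return true_positives / (true_positives + false_positives)


# Hypothetical inputs chosen only for illustration.
precision = flagged_precision(prevalence=0.001, sensitivity=0.99, specificity=0.99)
print(f"Share of flagged items that are really fake: {precision:.1%}")
```

With these illustrative inputs, only about 9% of flagged items are actually fake. The exact figure depends entirely on the assumed rates, but the pattern holds whenever fakes are rare relative to genuine media.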

Trust depends on systems, not just technology.

Platform Responsibility and Friction

Platforms play a critical role. Policies that slow sharing, label uncertain content, or require provenance add friction that reduces spread.

Friction changes behavior. When sharing is not instantaneous, manipulation loses some power.

However, enforcement varies, and incentives often favor engagement over caution.

See What ‘Developing Story’ Really Means in Breaking News for more on media framing.

The Role of Verification and Provenance

Provenance systems aim to track how media is created and modified. Watermarks, metadata, and cryptographic signatures can signal where a file came from and whether it has been altered since.

These systems help establish trust at the source rather than chasing fakes downstream.

Adoption remains uneven, limiting effectiveness.
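The idea of a signed provenance record can be sketched in a few lines. This is a simplified illustration, not how any particular provenance standard works: the function names, the manifest layout, and the shared secret key are all assumptions made for the example. It uses an HMAC from Python's standard library as a stand-in for the public-key signatures that real provenance systems generally rely on, so that verifiers do not need access to a secret.

```python
import hashlib
import hmac
import json


def make_manifest(media: bytes, creator: str, key: bytes) -> dict:
    """Bind a creator claim to the exact bytes of a media file (hypothetical format)."""
    claims = {"creator": creator, "sha256": hashlib.sha256(media).hexdigest()}
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    # HMAC stands in for a real digital signature in this sketch.
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "tag": tag}


def verify_manifest(media: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the claims are untampered and still match the media bytes."""
    claims = manifest["claims"]
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    tag_ok = hmac.compare_digest(expected, manifest["tag"])
    current_hash = hashlib.sha256(media).hexdigest()
    hash_ok = hmac.compare_digest(claims["sha256"], current_hash)
    return tag_ok and hash_ok


key = b"demo-key-not-for-production"  # assumption: a shared secret for the demo
original = b"raw video bytes"
manifest = make_manifest(original, "Example Newsroom", key)

print(verify_manifest(original, manifest, key))            # unmodified file
print(verify_manifest(original + b"edit", manifest, key))  # altered file
```

The first check passes and the second fails, because any change to the file changes its hash. Note what this does and does not show: a valid record says the file matches what the signer published, not that the content itself is true.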

Why Trust Breaks Faster Than It Rebuilds

Trust is asymmetric. It takes time to build and moments to destroy. Synthetic media exploits this imbalance.

Once audiences internalize the idea that media may be fake, skepticism becomes the default. Rebuilding confidence requires transparency and consistency.

The cost of mistrust is collective.

How Institutions Are Affected

Courts, newsrooms, and governments rely on evidence. Synthetic media forces new standards of verification and admissibility.

Institutions must invest in training and tools while managing public perception.

Failure to adapt risks legitimacy.

Check out AI Regulation Worldwide: Who’s Restricting What (and Why) for context on governance responses.

What a Post-Trust Environment Looks Like

In a low-trust environment, people retreat to familiar sources and beliefs. Polarization deepens as shared reality fragments.

Deepfakes do not just spread lies; they weaken the foundations that make truth persuasive.

This shift is harder to reverse than any single falsehood.

What Deepfakes Reveal About the Information Age

Deepfakes reveal a transition point. Information abundance no longer guarantees clarity.

Trust must be designed, supported, and defended as infrastructure. Without it, scale becomes a liability rather than a strength.

Understanding this helps explain why synthetic media is less about fake content and more about fragile systems.